2025

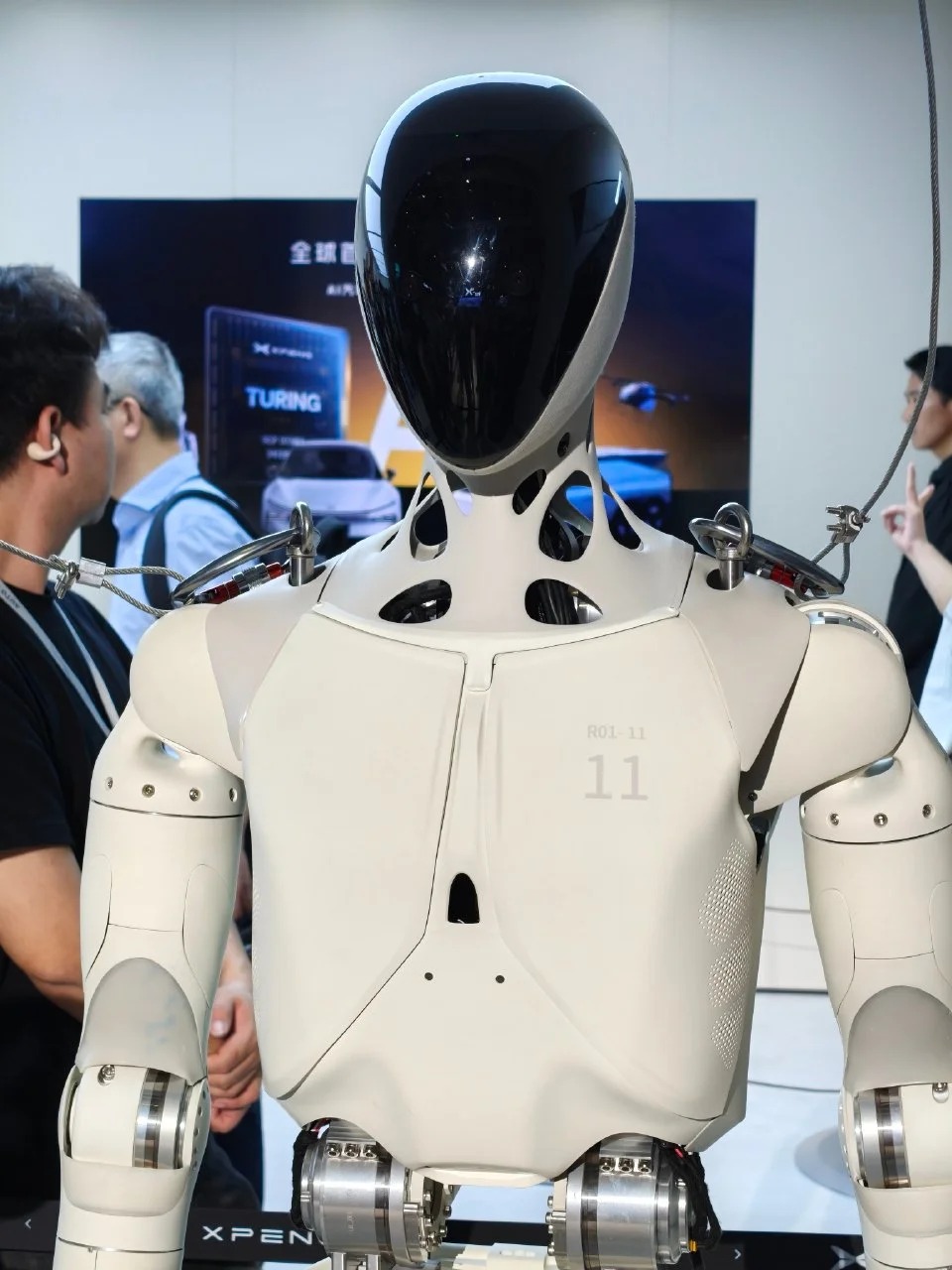

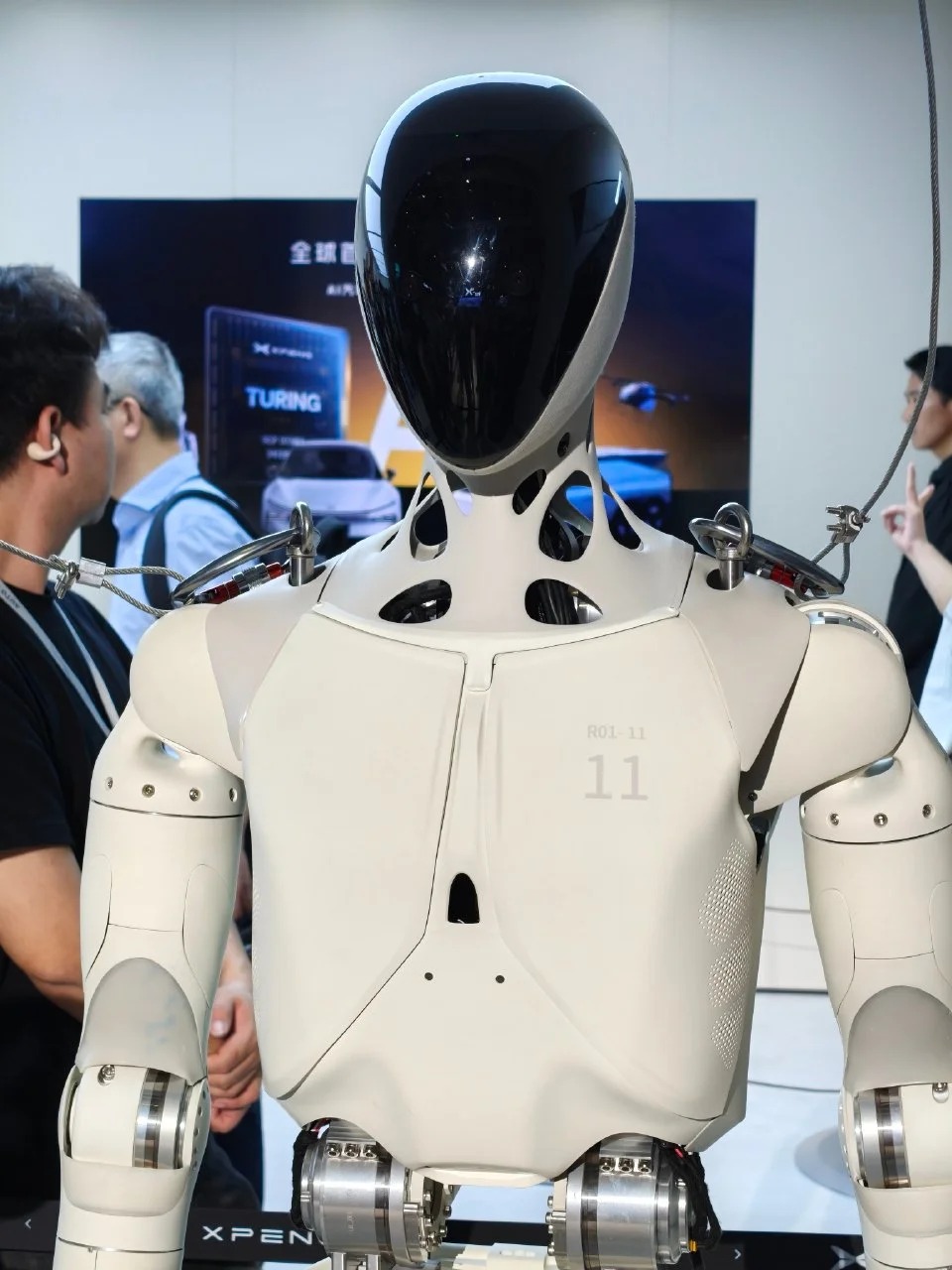

Xpeng Robotics Iron — Human-Robot Interaction Feature: Speech2Gesture

Researched and developed a Speech-to-Gesture system that supports streaming dialogue and translates spoken commands into natural robot gestures in real time, improving human-robot communication. The system and its underlying algorithms were showcased at the Shanghai Auto Show [VIDEO] and at the Tsinghua University anniversary celebration [VIDEO].

2024

Applying Multimodal Foundation Models to Pothole Detection in Autonomous Driving

Pre-trained and fine-tuned LLaVA on pothole datasets, and designed and evaluated high-resolution ViT variants, including ViT-756 and S2 ultra-high-resolution models. Compared to LLaVA 1.5, these models improved accuracy on the pothole recognition task by 3.2% and 10.1%, respectively.

2023

Multimodal Fusion for Localization, Autonomous Planning, and 3D Point Cloud Recognition on Unmanned Platforms

Participated in a project on multimodal fusion for localization, planning, and 3D point cloud recognition in unmanned systems. Designed deep learning models to classify and segment 3D point clouds captured by depth cameras, with a focus on distinguishing grass from obstacles. Proposed a universal topological layer (TopoLayer) based on persistent homology and integrated it into mainstream architectures such as PointMLP and PointNet++ to improve recognition accuracy [VIDEO].
